Introduction & Background

In contrast to the natural intelligence found in humans and animals, Artificial Intelligence, or simply AI as it’s commonly known, is intelligence demonstrated by machines. Leading AI textbooks define the field as the study of “intelligent agents”: any system that perceives its environment and takes actions that maximize its chances of achieving its goals.
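To make the “intelligent agent” definition more concrete, here is a minimal sketch in Python. It is only an illustration: the thermostat scenario, the `ACTIONS` table and the function names are assumptions made up for this example and do not come from any textbook or library. The agent perceives a temperature, scores each available action by how close it would bring the room to the goal, and picks the best one.

```python
# Hypothetical toy world: each action shifts the room temperature by a fixed amount.
ACTIONS = {"heat": 1.0, "cool": -1.0, "idle": 0.0}


def choose_action(perceived_temp: float, target: float) -> str:
    """Pick the action whose predicted outcome is closest to the goal."""
    def score(action: str) -> float:
        predicted = perceived_temp + ACTIONS[action]
        return -abs(predicted - target)  # higher score means closer to the target
    return max(ACTIONS, key=score)


def run(start_temp: float, target: float, steps: int):
    """Perceive, decide, act, repeat; return the (action, temperature) history."""
    temp = start_temp
    history = []
    for _ in range(steps):
        action = choose_action(temp, target)  # perceive the environment and decide
        temp += ACTIONS[action]               # the action changes the environment
        history.append((action, temp))
    return history


if __name__ == "__main__":
    for action, temp in run(start_temp=18.0, target=21.0, steps=5):
        print(action, temp)
```

Real agents work with far richer perceptions and goals, but the loop of perceiving, scoring possible actions and acting is the same basic idea.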

According to some popular accounts, “Artificial Intelligence” refers to machines that mimic “cognitive” functions that humans associate with the human mind, such as “problem solving.”

AI research has traditionally focused on reasoning, planning, learning, natural language processing, perception, and the ability to move and manipulate objects. One of the field’s long-term goals is general intelligence (the ability to solve any problem). Some people believe that if AI continues to advance at its current rate, it may endanger humanity.

AI is a constantly developing field in which new innovations continue to emerge and reshape different aspects of computing and society.

AI is also increasingly used within software development to build exciting new systems.

Early history and beginnings

(Image of the Colossus computer in the United Kingdom)

Calculating machines were invented in antiquity and improved by many mathematicians throughout history, including the philosopher Gottfried Leibniz. Charles Babbage designed a programmable computer (the Analytical Engine) in the nineteenth century, but it was never built. The first modern computers were the massive machines of the WWII era, such as Z3, ENIAC and the code-breaking Colossus.

In the 1940s and 1950s, a small group of scientists from various fields (mathematics, psychology, engineering, economics, and political science) began to discuss the possibility of developing an artificial brain to replicate human-like decision making with computers. In 1956, Artificial Intelligence became established as an academic discipline.

Claude Shannon’s information theory described digital signals (i.e., all-or-nothing signals). Alan Turing’s theory of computation demonstrated that any type of computation could be described digitally. The close relationship between these concepts suggested that an electronic brain could be built.

Marvin Minsky was a pioneer in the field of artificial intelligence. In 1951 he built the first artificial neural net machine, “the SNARC”. He was inspired by the work of Walter Pitts and Warren McCulloch, who were the first to describe what later researchers would call a neural network. Minsky’s research paved the way for AI technology, and he was recognized as an important leader of the field for the next 50 years.

Alan Turing published a research paper in 1950 in which he speculated on the possibility of creating machines that think for themselves. He observed that “thinking” is difficult to define and devised his famous Turing Test to address this. If a machine could have a conversation that was indistinguishable from a human conversation (via teleprinter), it was reasonable to say that the machine was “thinking.”

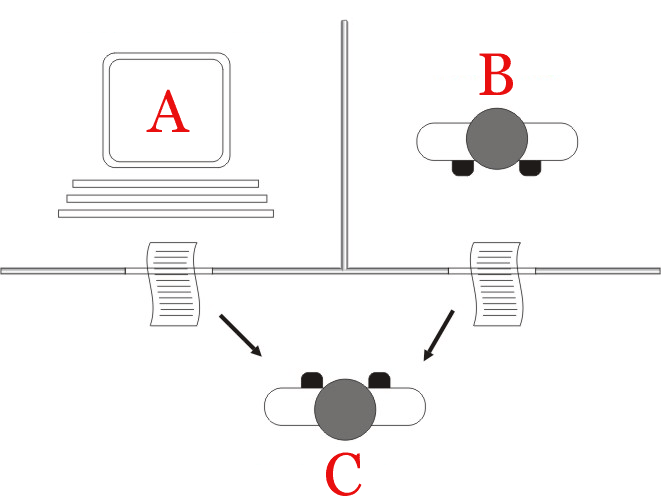

The “Turing test”

The image above is an interpretation of the Turing test whereby player C, the interrogator, is tasked with determining which player – A or B – is a computer and which is a human. The interrogator is only allowed to make a determination based on the answers to written questions.

Alan Turing developed the Turing test in 1950. It assesses a machine’s ability to exhibit intelligent behaviour that is comparable to, or indistinguishable from, that of a human.

Turing proposed that a human evaluator judge natural language conversations between a human and a machine programmed to generate human-like responses.
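The set-up described above can be sketched as a small Python simulation. This is a rough illustration under stated assumptions, not Turing’s own formulation: the function `imitation_game` and the `human`, `machine` and `interrogator` callables are hypothetical names invented for this example. The key point it shows is that the interrogator only ever sees labelled, written answers.

```python
import random


def imitation_game(questions, human, machine, interrogator, rng=None):
    """Run one round and return True if the interrogator spots the machine.

    `human`, `machine` and `interrogator` are hypothetical callables
    supplied by the caller; they are not part of any real library.
    """
    rng = rng or random.Random()

    # Hide the two respondents behind the labels A and B, in random order.
    respondents = [("human", human), ("machine", machine)]
    rng.shuffle(respondents)
    labelled = dict(zip("AB", respondents))

    # The interrogator only ever sees written questions and answers.
    transcript = {
        label: [(question, reply(question)) for question in questions]
        for label, (_, reply) in labelled.items()
    }

    guess = interrogator(transcript)  # the label believed to be the machine
    return labelled[guess][0] == "machine"
```

A machine would be said to do well in this sketch if, over many rounds, the interrogator’s guesses were right no more often than chance.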

Artificial Intelligence: The Dartmouth Workshop of 1956

Marvin Minsky, John McCarthy, and two senior scientists, Claude Shannon of Bell Labs and Nathan Rochester of IBM, organized the Dartmouth Workshop in 1956. The conference proposal stated that “every aspect of learning or any other feature of intelligence can be so precisely described that a machine can be made to simulate it.”

Ray Solomonoff, Oliver Selfridge, Trenchard More, Arthur Samuel, Allen Newell, and Herbert A. Simon were among the participants, all of whom would go on to create significant projects during the early decades of AI research. At the conference, Newell and Simon debuted the “Logic Theorist” and McCarthy persuaded attendees to accept the term “Artificial Intelligence” as the field’s name.

The 1956 Dartmouth conference is widely regarded as the birth of AI because it gave the field its name, its mission, its first success, and some of its key players.

McCarthy chose the term “Artificial Intelligence” to avoid associations with cybernetics and Norbert Wiener, an influential cyberneticist at the time.

Advancements between 1956-1974

The research projects developed in the years following the Dartmouth Workshop were simply “astonishing” to most people: computers were solving algebra word problems, proving geometric theorems, and learning to speak English.

Few people would have believed that such “intelligent” machine behavior was even possible at the time. In private and public, researchers expressed extreme optimism, predicting that a fully intelligent machine would be built in less than 20 years. DARPA and other government agencies poured money into the new field.

During this period, development was significantly propelled by government funding and by the increased computing power that was becoming available.

Different types of AI applications

Artificial Intelligence technologies can be integrated into a variety of fields including the following:

NLP (Natural Language Processing)

Natural Language Processing, or NLP for short, is a type of technology made possible by AI. NLP works by analyzing text data such as words and sentences and then attempting to make sense of it.

NLP technology can be found inside many applications such as grammar checkers, auto-suggest searches, auto-correct software and chatbots, which can recognize the words being typed and then suggest content based on the software’s understanding of those words.

NLP attempts to replicate the brain’s understanding of linguistics and make sense of the many different words and their underlying meaning.
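As a rough illustration of the auto-correct idea mentioned above, the following Python sketch splits text into words and replaces each unknown word with the closest entry in a small word list. It uses only the standard library (`re` and `difflib.get_close_matches`). The `VOCABULARY` list, the function names and the 0.8 similarity cutoff are assumptions chosen for this example; real products use much larger dictionaries and language models rather than simple string similarity.

```python
import difflib
import re

# Hypothetical, tiny vocabulary used only for this example.
VOCABULARY = ["artificial", "intelligence", "language", "machine", "natural", "processing"]


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def suggest(word: str) -> str:
    """Return the word itself if known, else the closest vocabulary entry."""
    if word in VOCABULARY:
        return word
    matches = difflib.get_close_matches(word, VOCABULARY, n=1, cutoff=0.8)
    return matches[0] if matches else word  # leave unfamiliar words unchanged


def autocorrect(text: str) -> list[str]:
    """Tokenize the text and apply a suggestion to every token."""
    return [suggest(token) for token in tokenize(text)]


if __name__ == "__main__":
    print(autocorrect("Natural langauge procesing"))
```

Note that this only compares spellings. It has no notion of meaning, which is the harder part of NLP that the paragraph above refers to.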

Voice Recognition

Another field where AI is utilized is voice recognition. Like NLP, it is concerned with words, but rather than purely analyzing textual information, voice recognition systems capture audio from a source such as a microphone and then attempt to convert that audio into text that a computer can work with.

From here the computer can process the information and carry out different tasks, such as opening applications, checking directions on a map or completing a plethora of other potential actions.
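The second half of that pipeline, turning recognized text into an action, might look something like the Python sketch below. Everything here is hypothetical: `transcribe` is only a stand-in for a real speech-to-text engine (it simply decodes bytes so the example runs without a microphone), and the `COMMANDS` table and its phrases are invented for illustration.

```python
def transcribe(audio: bytes) -> str:
    """Placeholder for a real speech-to-text engine (hypothetical).

    For this sketch the "audio" is just UTF-8 encoded text.
    """
    return audio.decode("utf-8")


# Hypothetical mapping from a spoken keyword to the task it triggers.
COMMANDS = {
    "open": lambda rest: f"Opening {rest}",
    "directions": lambda rest: f"Getting directions {rest}",
}


def handle(audio: bytes) -> str:
    """Convert audio to text, then dispatch on the first word."""
    text = transcribe(audio).lower().strip()
    keyword, _, rest = text.partition(" ")
    action = COMMANDS.get(keyword)
    if action is None:
        return "Sorry, I did not understand that."
    return action(rest)


if __name__ == "__main__":
    print(handle(b"Open calculator"))
    print(handle(b"Directions to the airport"))
```

In a real assistant the transcription step is the part that relies on AI, and the dispatch step would typically use NLP to interpret the request rather than matching on a single keyword.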

Conclusion

We hope you found this page of our glossary helpful. If so, be sure to share it with anyone who may be interested and follow AGR Technology for more updates.

Be sure to check out our business services, blog and free software/tools.


Citation(s) & Source(s):

“Artificial intelligence.” Wikipedia, 8 Oct. 2001, en.wikipedia.org/wiki/Artificial_intelligence. Accessed 16 Aug. 2021.

“History of artificial intelligence.” Wikipedia, 13 Oct. 2005, en.wikipedia.org/wiki/History_of_artificial_intelligence. Accessed 30 Aug. 2021.

Public domain extract from National Archives (UK), via Wikimedia Commons

Turing, A. M. “Computing Machinery and Intelligence.” Mind, vol. LIX, no. 236, Oct. 1950, pp. 433–460, https://doi.org/10.1093/mind/LIX.236.433

Juan Alberto Sánchez Margallo, CC BY 2.5 https://creativecommons.org/licenses/by/2.5, via Wikimedia Commons

“Turing test.” Wikipedia, 26 Aug. 2001, en.wikipedia.org/wiki/Turing_test. Accessed 4 Sept. 2021.

“What is Natural Language Processing?” IBM, 2 July 2020, www.ibm.com/cloud/learn/natural-language-processing. Accessed 4 Sept. 2021.